What is Epoch in Machine Learning?

Topics:

Epoch in Machine Learning

What Is Epoch?

Example of an Epoch-Stochastic Gradient Descent

What Is Iteration?

What Is a Batch in Machine Learning?

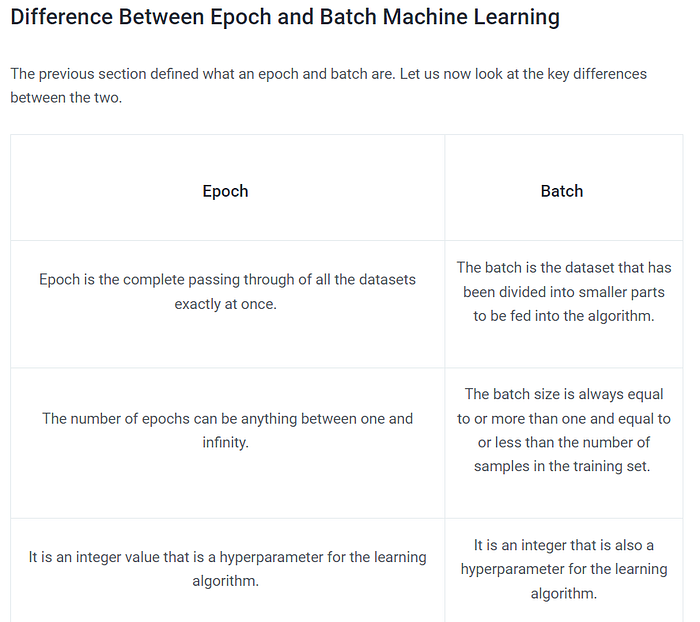

Difference Between Epoch and Batch Machine Learning

Why Use More Than One Epoch?

“Epoch” a Machine Learning term, and discuss what it is, along with other relative terms like batch, iterations, stochastic gradient descent and the difference between Epoch and Batch. These are must-know terms for anyone studying deep learning and machine learning or trying to build a career in this field.

Epoch in Machine Learning

Machine learning is a field where the learning aspect of Artificial Intelligence (AI) is the focus. This learning aspect is developed by algorithms that represent a set of data. Machine learning models are trained with specific datasets passed through the algorithm.

Each time a dataset passes through an algorithm, it is said to have completed an epoch. Therefore, Epoch, in machine learning, refers to the one entire passing of training data through the algorithm. It’s a hyperparameter that determines the process of training the machine learning model.

The training data is always broken down into small batches to overcome the issue that could arise due to storage space limitations of a computer system. These smaller batches can be easily fed into the machine learning model to train it. This process of breaking it down to smaller bits is called batch in machine learning. This procedure is known as an epoch when all the batches are fed into the model to train at once.

What Is Epoch?

An epoch is when all the training data is used at once and is defined as the total number of iterations of all the training data in one cycle for training the machine learning model.

Another way to define an epoch is the number of passes a training dataset takes around an algorithm. One pass is counted when the data set has done both forward and backward passes.

The number of epochs is considered a hyperparameter. It defines the number of times the entire data set has to be worked through the learning algorithm.

Every sample in the training dataset has had a chance to update the internal model parameters once during an epoch. One or more batches make up an epoch. The batch gradient descent learning algorithm, for instance, is used to describe an Epoch that only contains one batch.

Learning algorithms take hundreds or thousands of epochs to minimize the error in the model to the greatest extent possible. The number of epochs may be as low as ten or high as 1000 and more. A learning curve can be plotted with the data on the number of times and the number of epochs. This is plotted with epochs along the x-axis as time and skill of the model on the y-axis. The plotted curve can provide insights into whether the given model is under-learned, over-learned, or a correct fit to the training dataset.

Example of an Epoch

Let’s explain Epoch with an example. Consider a dataset that has 200 samples. These samples take 1000 epochs or 1000 turns for the dataset to pass through the model. It has a batch size of 5. This means that the model weights are updated when each of the 40 batches containing five samples passes through. Hence the model will be updated 40 times.

Stochastic Gradient Descent

A stochastic gradient descent or SGD is an optimizing algorithm. It is used in the neural networks in deep learning to train machine learning algorithms. The role of this optimizing algorithm is to identify a set of internal model parameters that outperform other performance metrics like mean squared error or logarithmic loss.

One can think of optimization as a searching process involving learning. Here the optimization algorithm is called gradient descent. The “gradient” denotes the calculation of an error gradient or slope of error, and “descent” indicates the motion along that slope in the direction of a desired minimum error level.

The algorithm enables the search process to run multiple times over discrete steps. This is done to improve the model parameters over each step slightly. This feature makes the algorithm iterative.

In each stage, predictions are made using specific samples using the current set of internal parameters. Ther predictions are then compared to the tangible expected outcomes. The error is then calculated, and the internal model parameters are updated. Different algorithms use different update procedures. When it comes to artificial neural networks, the algorithm uses the backpropagation method.

What Is Iteration?

The total number of batches required to complete one Epoch is called an iteration. The number of batches equals the total number of iterations for one Epoch.

Here is an example that can give a better understanding of what an iteration is.

Say a machine learning model will take 5000 training examples to be trained. This large data set can be broken down into smaller bits called batches.

Suppose the batch size is 500; hence, ten batches are created. It would take ten iterations to complete one Epoch.

What Is a Batch in Machine Learning?

Batch size is a hyperparameter which defines the number of samples taken to work through a particular machine learning model before updating its internal model parameters.

A batch can be considered a for-loop iterating over one or more samples and making predictions. These predictions are then compared to the expected output variables at the end of the batch. The error is calculated by comparing the two and then used to improve the model.

A training dataset can be broken down into multiple batches. If only a single batch exists, that all the training data is in one batch, then the learning algorithm is called batch gradient descent. The learning algorithm is called stochastic gradient descent, when an entire sample makes up a batch. The algorithm is called a mini-batch gradient descent when the batch size is more than one sample but less than the training dataset size.

Why Use More Than One Epoch?

An epoch consists of passing a dataset through the algorithm completely. Each Epoch consists of many weight update steps. To optimize the learning process, gradient descent is used, which is an iterative process. It improves the internal model parameters over many steps and not at once.

Hence the dataset is passed through the algorithm multiple times so that it can update the weights over the different steps to optimize learning.

If you like this Article you can comment and hit like and Comment in Comment Section

Hence the dataset is passed through the algorithm multiple times so that it can update the weights over the different steps to optimize learning.